Research

Publications

The team is currently preparing a submission draft for IndustrialKG 2017. Please contact the team members directly if you need the latest iteration of the work. The technical report for the coursework is available below.

Technical Report

Rashid, S., Viswanathan, A., & Gross, I. (2017). Applying Web Semantics to Evaluate Biomedical Knowledge Graphs. Ontology Engineering, 1-12. Technical Report. "Download Technical Report"

Publication

Rashid, S., Viswanathan, A., Gross, I., Kendall, E., & McGuinness, D. L. (2017). Leveraging Semantics for Large-Scale Knowledge Graph Evaluation. Workshop on Industrial Knowledge Graphs (IndustrialKG 2017), 1-6. Submitted. "Download submitted version"

Presentations

Here are presentations given in the Ontology Engineering class:

- "Assignment 11 Presentation"

- "Assignment 8 Presentation"

- "Assignment 7 Presentation"

- "Assignment 3 Presentation"

Related Work and References

Knowledge graph quality evaluation is an important task for any information-intensive science. This work builds on methods for evaluating large-scale ontologies and RDF-based knowledge graphs.

Ontology Concept Source

Concepts and references for the PubMed data extraction system (ODIN) and its output can be found below:

- ODIN Toolkit

- Reach Datasets

- These resources are maintained by the Computational Language Understanding Lab (CLU Lab) at the University of Arizona.

Inspiration and Related Work

- Ontology Evaluation Survey - Brank: The survey by Brank et al. discusses ontology evaluation in terms of categories, such as comparison against a gold standard (another reference ontology), comparison with domain data, and human assessment. The authors also introduce a level-based approach in which evaluation is split into separate layers that may focus on vocabulary, hierarchy structure, syntax, semantic relations, or references to other ontologies.

- Ontology Evaluation Survey - Zaveri: A comprehensive survey of Linked Data quality by Zaveri states that knowledge graph evaluation focuses on the quality of RDF graphs in terms of accessibility, availability, syntactic validity, and conciseness.

- Ontology Evaluation - Chimaera: Chimaera provided a web-based user interface that supported automated merging of multiple ontologies and diagnosis of possible inconsistencies. Chimaera also provided tests covering incompleteness and taxonomic analysis, as well as semantic and syntactic checks.

- Ontology Evaluation - OOPS!: The OntOlogy Pitfall Scanner! tool uses a RESTful interface through which users submit their ontology in an easy-to-understand way. It targets both newcomers and domain experts unfamiliar with ontology building, and provides them with a list of pitfalls in ontology creation and management.

- Data Evaluation - Tao, J., Ding: Tao et al. worked on a system that extends OWL for instance data evaluation. In this work, the authors distinguish between knowledge management schemas and data, where the former corresponds to ontologies and the latter refers to instances. Tao et al. design a general evaluation process for instance data based on three inconsistency categories: Syntax Errors, Logical Inconsistencies, and Possible Issues.

- Ontology Evolution - Evolva: In Ontology Evolution with Evolva, the tool aims to cover the adaptation of ontologies, the management of these changes, and the use of background knowledge sources to reduce user involvement in the ontology adaptation step. The Evolva NeOn Toolkit plugin uses the Evolva framework to showcase the system.

Use Case References

- Brank, J., Grobelnik, M., & Mladenic, D. (2005, October). A survey of ontology evaluation techniques. In Proceedings of the conference on data mining and data warehouses (SiKDD 2005) (pp. 166-170).

- Ding, L., Tao, J., & McGuinness, D. L. OWL Instance Data Evaluation.

- Ding, L., Tao, J., & McGuinness, D. L. (2008, April). An initial investigation on evaluating semantic web instance data. In Proceedings of the 17th international conference on World Wide Web (pp. 1179-1180). ACM.

- Dumontier, M., et al. (2014). The Semanticscience Integrated Ontology (SIO) for biomedical research and knowledge discovery. Journal of Biomedical Semantics, 5(1), 14.

- Gómez-Pérez, A. OOPS! (OntOlogy Pitfall Scanner!): supporting ontology evaluation on-line.

- Guo, M., Liu, Y., Li, J., Li, H., & Xu, B. (2014, May). A knowledge based approach for tackling mislabeled multi-class big social data. In European Semantic Web Conference (pp. 349-363). Springer International Publishing.

- Hlomani, H., & Stacey, D. (2014). Approaches, methods, metrics, measures, and subjectivity in ontology evaluation: A survey. Semantic Web Journal, 1-5.

- McGuinness, D. L., Fikes, R., Rice, J., & Wilder, S. (2000, April). An environment for merging and testing large ontologies. In KR (pp. 483-493).

- McGuinness, D. L., Fikes, R., Rice, J., & Wilder, S. (2000). The chimaera ontology environment. AAAI/IAAI, 2000, 1123-1124.

- Pujara, J., Miao, H., Getoor, L., & Cohen, W. W. (2013). Knowledge graph identification.

- Tao, J., Ding, L., & McGuinness, D. L. (2009, January). Instance data evaluation for semantic web-based knowledge management systems. In System Sciences, 2009. HICSS'09. 42nd Hawaii International Conference on (pp. 1-10). IEEE.

- Tao, J. (2012). Integrity constraints for the semantic web: an owl 2 dl extension (Doctoral dissertation, Rensselaer Polytechnic Institute).

- Valenzuela-Escárcega, M. A., Hahn-Powell, G., & Surdeanu, M. (2015). Description of the Odin event extraction framework and rule language. arXiv preprint arXiv:1509.07513.

Design

Conceptual Model Overview

We evaluate a large-scale biomedical knowledge graph constructed from the output of ODIN, a state-of-the-art information extraction (IE) toolkit. ODIN takes PubMed documents as input and extracts entities, processes, contexts, and passages. Due to the nature of IE toolkits, this output contains misclassified labels, entities, and processes. Our goal is to evaluate this output using an ontology by adding semantics back to the output.

We use the output schema of ODIN, the FRIES format, as a starting point to define the conceptual terms in our ontology. We then add semantics in the form of RDFS and owl:sameAs axioms. Finally, we parse the ODIN output and create our knowledge graph, which instantiates the ontology.
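The parsing step described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the `KGES` namespace IRI, the property names `hasLabel` and `extractedFrom`, and the keys of the input record are hypothetical stand-ins, not the actual FRIES fields or KGES ontology terms.

```python
# Hypothetical namespace; the real KGES ontology IRI is not given on this page.
KGES = "http://example.org/kges#"
RDF_TYPE = "http://www.w3.org/1999/02/22-rdf-syntax-ns#type"


def escape_literal(text):
    """Escape backslashes, double quotes and line breaks for N-Triples."""
    return (text.replace("\\", "\\\\")
                .replace('"', '\\"')
                .replace("\n", "\\n")
                .replace("\r", "\\r"))


def mention_to_ntriples(record):
    """Turn one simplified, FRIES-like mention record into N-Triples lines.

    The keys "id", "type", "text" and "sentence" are assumptions made for
    this sketch, and the id values are assumed to be safe to embed in an IRI.
    """
    subject = f"<{KGES}{record['id']}>"
    label = escape_literal(record["text"])
    return [
        f"{subject} <{RDF_TYPE}> <{KGES}{record['type']}> .",
        f'{subject} <{KGES}hasLabel> "{label}" .',
        f"{subject} <{KGES}extractedFrom> <{KGES}{record['sentence']}> .",
    ]


example = {"id": "ment-1", "type": "EntityMention",
           "text": "CTLA-4", "sentence": "sent-1"}
for line in mention_to_ntriples(example):
    print(line)
```

Emitting N-Triples keeps the sketch dependency-free; the resulting lines can be written to a file and loaded into a triple store alongside the ontology.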

Among the evaluations that we run on the knowledge graph, we look for possible inconsistencies. These are classified into different types, which are detailed in the technical report here.

The diagrams below explain the overarching concepts of the KGES ontology. To use the system, load the latest RDF files from the Ontology Versions section below into a triple store. We recommend Virtuoso Open Source or Jena TDB. Virtuoso installation instructions are here. Once loading has finished, refer to the Demonstration and Queries section and try the sample queries on your endpoint to see the results.
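Once the RDF files are loaded, a quick way to confirm that the endpoint responds is to send it a small query over the SPARQL Protocol. The sketch below uses only the Python standard library. The endpoint URL is an assumption (a local Virtuoso instance on its usual port); adjust it to your own installation.

```python
import json
import urllib.parse
import urllib.request

# Assumption: a local Virtuoso instance exposing its SPARQL endpoint here.
ENDPOINT = "http://localhost:8890/sparql"


def build_sparql_request(query, endpoint=ENDPOINT):
    """Build (but do not send) a SPARQL Protocol GET request."""
    url = endpoint + "?" + urllib.parse.urlencode({"query": query})
    return urllib.request.Request(
        url, headers={"Accept": "application/sparql-results+json"})


def run_select(query, endpoint=ENDPOINT):
    """Send a SELECT query and return the list of result bindings."""
    with urllib.request.urlopen(build_sparql_request(query, endpoint)) as resp:
        return json.load(resp)["results"]["bindings"]


# Sanity check after loading: count the triples in the store.
COUNT_QUERY = "SELECT (COUNT(*) AS ?n) WHERE { ?s ?p ?o }"
request = build_sparql_request(COUNT_QUERY)
print(request.full_url)
```

Calling `run_select(COUNT_QUERY)` against a running endpoint should return a single binding for `?n`; a count of zero suggests the files were not loaded into the graph being queried.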

Schema Diagrams

Entity Mention Context

The ontology portion that identifies the entities extracted from the PubMed documents.

Event Mention Context

The ontology portion that identifies each Event Mention, according to the Information Extraction Tool. The schema provides the means to query for exact entity mentions and their attributes.

Passage Context

The ontology portion that identifies the position of the term from the extracted document. All terms are identified separately, as defined by the Information Extraction portion of KGES. Sentences are extracted from the passages, from which entity and event mentions are extracted.

Inconsistency Diagram

The conceptual model of how the inconsistencies are classified is shown below. Several classes of inconsistencies are defined relative to event and entity mentions in terms of disjointness and label mismatch.

Instance Diagrams

Below are some example instances of terms extracted by the Information Extraction system, showing how they connect to the rest of the ontology. The inconsistencies are subclass relations that are connected to these instance terms. The examples show how a single label from a single sentence can be extracted as multiple mention types. Further details are included in the Demonstration and Queries section.
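The case of one label in one sentence being extracted under several mention types can also be checked mechanically. The following is a minimal sketch, assuming that mentions are available as (sentence id, label, mention type) tuples and that the pairs of disjoint types are known. The type names and the disjointness declaration are illustrative only and are not taken from the KGES ontology.

```python
from collections import defaultdict

# Illustrative disjointness declaration; not taken from the KGES ontology.
DISJOINT = {frozenset({"Protein", "Chemical"})}


def find_inconsistencies(mentions, disjoint=DISJOINT):
    """Group mentions by (sentence, label) and flag conflicting types.

    Returns a dict mapping (sentence, label) to a pair
    (sorted types, has_disjoint_pair) for every label that was extracted
    under more than one mention type in the same sentence.
    """
    types_by_key = defaultdict(set)
    for sentence, label, mention_type in mentions:
        types_by_key[(sentence, label)].add(mention_type)

    report = {}
    for key, types in types_by_key.items():
        if len(types) < 2:
            continue  # a single mention type cannot conflict with itself
        ordered = sorted(types)
        clash = any(frozenset({a, b}) in disjoint
                    for i, a in enumerate(ordered)
                    for b in ordered[i + 1:])
        report[key] = (ordered, clash)
    return report


mentions = [
    ("s1", "ATP", "Chemical"),
    ("s1", "ATP", "Protein"),
    ("s1", "CTLA-4", "Protein"),
    ("s2", "ATP", "Chemical"),
]
print(find_inconsistencies(mentions))
```

In this toy input only the label "ATP" in sentence "s1" is reported, because it is the only label that carries two mention types within the same sentence.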

p57 Instances

p200 Instances

Ontology Versions

Listed below are some of the various iterations of the ontology that were created as part of the Ontology Engineering class. Older versions are not maintained and are not guaranteed to be functional.

MagicDraw Files

Main Ontologies:

Individuals Ontologies:

RDF Files

Main Ontologies:

Individuals Ontologies:

Demonstration and Queries

Intended Use

These queries are intended to answer the various competency questions posed to the ontology. The primary queries focus on locating a particular entity or event and determining its potential inconsistencies.

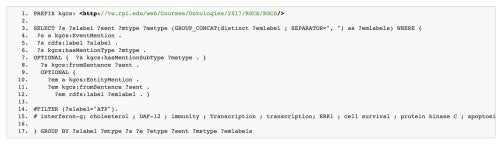

Example Query 1

Question: How many entity mentions are about CTLA-4? What sentences do they come from and what are their extracted mention types? What are the equivalent classes of that individual, and what are the map types for those equivalent classes?

This is an example of the general question: what entity mentions exist for a given label? To answer it, a SPARQL query is issued to find where CTLA-4 was mentioned. The query returned a list of 86 entity mention results. Several sentences are showcased below, with their equivalent classes and map types.
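The general shape of such a query can be sketched as a parameterised template. The prefix IRI and the property names (`kges:hasLabel`, `kges:extractedFrom`, `kges:hasMentionType`) are hypothetical placeholders for illustration; the actual KGES vocabulary should be taken from the ontology files. The helper that counts results assumes the standard SPARQL JSON results layout.

```python
# Hypothetical prefix and property names, for illustration only.
QUERY_TEMPLATE = """\
PREFIX kges: <http://example.org/kges#>
SELECT ?mention ?sentence ?type WHERE {{
  ?mention a kges:EntityMention ;
           kges:hasLabel {label} ;
           kges:extractedFrom ?sentence ;
           kges:hasMentionType ?type .
}}
"""


def sparql_literal(text):
    """Quote text as a SPARQL string literal, escaping special characters."""
    escaped = (text.replace("\\", "\\\\")
                   .replace('"', '\\"')
                   .replace("\n", "\\n")
                   .replace("\r", "\\r"))
    return f'"{escaped}"'


def entity_mention_query(label):
    """Fill the template with the label to look up."""
    return QUERY_TEMPLATE.format(label=sparql_literal(label))


def count_mentions(results):
    """Count distinct ?mention values in a SPARQL JSON results document."""
    bindings = results["results"]["bindings"]
    return len({row["mention"]["value"] for row in bindings})


print(entity_mention_query("CTLA-4"))
```

Counting distinct `?mention` values rather than rows matters here, since a mention with several mention types would otherwise be counted once per type.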

Results: KGES - CTLA-4 Entity Mentions Query Results

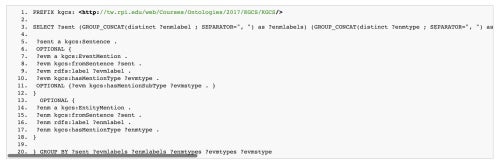

Example Query 2

Question: How many event mentions are about ATP? What sentences do they come from and what are their extracted mention types? What are the extracted mention subtypes? Which entity mentions are also extracted from the given sentence?

Results: KGES - ATP Event Mention Query Results

Example Query 3

Question: What events and entities are extracted from a given sentence? What are their mention types and subtypes?

Results: KGES - Single Sentence Query Results

Class Content

Here are links to the KGES team submissions for the assignments of the Spring 2017 Ontology Engineering class (located in our Google Drive).

- Assignment 12: Ontology Evolution and Maintenance Policies

- Assignment 11: Ontology Evaluation

- Assignment 10: Question Answering in SPARQL

- Assignment 9: Provenance, References, and Evidence Citation

- Assignment 8: Revised Ontology 2

- Assignment 7: Revised Ontology

- Assignment 6: Preliminary Ontology Development

- Assignment 5: Conceptual Model

- Assignment 4/4b

- Assignment 3